Introduction and investment thesis

Nvidia Corporation’s (NASDAQ:NVDA) latest earnings report had been eagerly awaited by the investor community. The main question was whether the chip giant could make up for diminishing revenues from China amid strengthening U.S. export regulations. As China consistently accounted for 20-25% of the company’s revenues, this wasn’t obvious at first sight. However, as it turned out, there is still ample demand for Nvidia’s HGX AI supercomputing platform, which combines Nvidia GPUs with its in-house interconnect and networking technologies.

In my latest article on the company (Nvidia: Diving Deep Into Its Technological Supremacy), I argued that Nvidia’s years of experience in building the hardware and software infrastructure for accelerated computing could secure its market-leading position for years to come. The recent earnings print and other important developments since then have confirmed my thesis, with my bull-case valuation scenario slowly turning into my base case.

Tight supply dominates China setback

Nvidia reported revenues of $22.1 billion for its FY24 Q4 quarter, surpassing the $20.5 billion average analyst estimate. Of course, the beat was driven by better-than-expected performance in the company’s data center unit, where sales reached $18.4 billion, surpassing the average estimate of $17.2 billion.

Based on the company’s FY25 Q1 sales guidance of $24 billion (vs. the $22 billion analyst estimate), the market for GPUs and networking solutions is far from running out of steam, as this would mean continued quarter-over-quarter acceleration on the top line. All this comes despite revenues from China dropping to a mid-single-digit share of the data center segment, which leaves them ~$2.5-$3.5 billion short of what the previous 20-25% share would imply.
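The size of this shortfall can be roughly reproduced with a back-of-the-envelope calculation. The sketch below is illustrative only: it assumes the gap equals the difference between the former and the current China share, applied to the $18.4 billion data center revenue. The 6% current share is my own stand-in for "mid-single digits" and is not a reported figure, and the function name is hypothetical.

```python
def china_shortfall(dc_revenue_bn, prior_share, current_share):
    """Revenue gap in $bn between the prior and the current China share."""
    return dc_revenue_bn * (prior_share - current_share)

DC_REVENUE_BN = 18.4   # FY24 Q4 data center revenue, from the article
CURRENT_SHARE = 0.06   # ASSUMPTION: stand-in for "mid-single digits"

# Prior China share of 20% and 25%, as cited in the article
low = china_shortfall(DC_REVENUE_BN, 0.20, CURRENT_SHARE)
high = china_shortfall(DC_REVENUE_BN, 0.25, CURRENT_SHARE)

print(f"Estimated quarterly shortfall: ${low:.1f}-{high:.1f} billion")
```

Under these assumptions the result lands close to the ~$2.5-$3.5 billion range above; a different current share would shift the range accordingly.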

According to CEO Jen-Hsun Huang, supply of the Nvidia HGX platform remains constrained as “demand far exceeds supply.” This means Nvidia could redirect shipments originally intended for the Chinese market to other customers, preventing the decline in exports to China from materially affecting the top line.

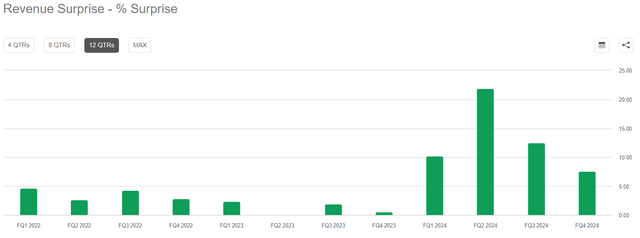

Based on this, it’s very challenging to estimate the true demand for the company’s solutions, as quarterly revenue figures are still capped by supply constraints. At the same time, however, this has an important smoothing effect on revenues, making it less likely that they will suddenly fall off a cliff. The magnitude of recent revenue beats also shows that quarterly sales figures are becoming more predictable over time:

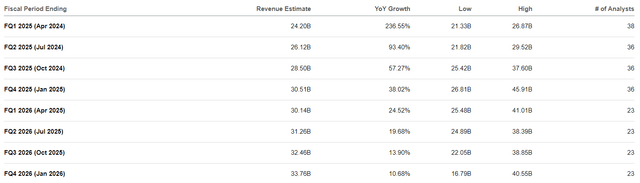

Seeking Alpha

In FY24 Q4, Nvidia beat analysts’ revenue estimates by 7.5%, compared with 12.5% and 22% in Q3 and Q2, respectively. This shows that the extent to which supply constraints can influence quarterly revenues is gradually decreasing, making management’s revenue estimates more reliable. In my experience, most high-growth tech companies try to keep a ~5% buffer above their public guidance to stay conservative and deliver a positive surprise. Based on this, I believe Nvidia management expects Q1 revenues to come in a little north of $25 billion, ~5% higher than the $24 billion management guidance. In my opinion, this suggests that current analyst estimates are still too conservative for the upcoming quarters:

Seeking Alpha

As long as supply constraints persist, I assume revenues will continue to grow by $2-3 billion sequentially as the company gradually adds capacity. This could result in revenues of $27-28 billion for Q2, compared to the current consensus of $26.1 billion.
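The arithmetic behind this projection can be sketched in a few lines. This is a minimal illustration using only the figures quoted above; the ~5% buffer and the $2-3 billion sequential growth are my assumptions from the text, not company guidance, and the variable names are made up for the example.

```python
GUIDANCE_Q1_BN = 24.0        # management's FY25 Q1 revenue guidance
BUFFER = 0.05                # ASSUMPTION: ~5% cushion above public guidance
SEQ_GROWTH_BN = (2.0, 3.0)   # ASSUMPTION: sequential growth while supply is tight
CONSENSUS_Q2_BN = 26.1       # current analyst consensus for Q2

# Revenue management may internally expect for Q1
implied_q1 = GUIDANCE_Q1_BN * (1 + BUFFER)

# Q2 range obtained by adding the assumed sequential growth
q2_low = implied_q1 + SEQ_GROWTH_BN[0]
q2_high = implied_q1 + SEQ_GROWTH_BN[1]

print(f"Implied Q1 revenue: ${implied_q1:.1f} billion")
print(f"Projected Q2 revenue: ${q2_low:.1f}-{q2_high:.1f} billion")
print(f"Gap vs. consensus: ${q2_low - CONSENSUS_Q2_BN:.1f}-"
      f"{q2_high - CONSENSUS_Q2_BN:.1f} billion")
```

With these inputs, the projected Q2 range sits roughly $1-2 billion above the consensus figure, which is the gap I refer to when calling estimates conservative.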

However, the most important question is what happens once supply constraints are eliminated: how long can demand keep up its current strong pace? I believe several signs indicate that revenues shouldn’t fall off a cliff when we reach this point in a few quarters.

Growth pillars hold strong

Probably the most important reason for this is that hyperscalers (which made up more than half of Nvidia’s revenues in the most recent quarter) and large consumer Internet companies have very ambitious plans for their AI infrastructure in 2024. This was evident in their recent earnings calls, where all of them talked about significantly increasing CapEx, led by investments in AI infrastructure. Here are a few examples, the first from Meta Platforms (META):

“Turning now to the CapEx outlook. We anticipate our full year 2024 capital expenditures will be in the range of $30 billion to $37 billion, a $2 billion increase of the high end of our prior range. We expect growth will be driven by investments in servers, including both AI and non-AI hardware, and data centers as we ramp up construction on sites with our previously announced new data center architecture.” – Susan Li, Meta CFO on 2023 Q4 earnings call.

After spending $28 billion on CapEx in 2023, Meta raised the stakes significantly for 2024, lifting the high end of its guidance range to $37 billion. As most of these investments go into servers and data centers, Nvidia should be a key beneficiary.

The same applies to Alphabet (GOOG), where management had the following to say:

“The step-up in CapEx in Q4 reflects our outlook for the extraordinary applications of AI to deliver for users, advertisers, developers, cloud enterprise customers and governments globally and the long-term growth opportunities that offers. In 2024, we expect investment in CapEx will be notably larger than in 2023.” – Ruth Porat, Alphabet CFO on 2023 Q4 earnings call.

Finally, a quick one from Microsoft (MSFT):

“We expect capital expenditures to increase materially on a sequential basis, driven by investments in our cloud and AI infrastructure…” – Amy Hood, Microsoft CFO on 2024 Q2 earnings call.

I believe all these statements confirm that the demand for Nvidia’s GPUs and networking solutions will remain strong over the upcoming quarters.

Another interesting factor that could contribute to continued positive revenue surprises is the Chinese market, where Nvidia plans to begin mass production of the H20, L20, and L2 chips, developed specifically for China, in Q2 this year. As I mentioned earlier, Nvidia is temporarily losing $2.5-$3.5 billion per quarter due to tightened U.S. export restrictions, which the company is trying to work around with its new product line. As China still struggles with the mass production of state-of-the-art AI chips, I believe there should be renewed demand for Nvidia’s AI chips in China if the U.S. doesn’t tighten restrictions further. This could also be an important driver of future revenue upside.

Speaking of new product lines, Nvidia will begin to ship its Spectrum-X Ethernet-based networking solution this quarter, which could also become a multibillion-dollar business. The company’s networking business, led by InfiniBand, reached a $13 billion annualized revenue run rate by the end of Q4 and could continue to grow at a strong clip.

Finally, looking at the software side, Nvidia AI Enterprise and DGX Cloud reached an annualized revenue run rate of more than $1 billion by the end of the year. As Nvidia’s GPUs and networking solutions penetrate the market, there will be an increasing need to support this infrastructure, which could soon also become a multibillion-dollar business, led by Nvidia AI Enterprise. Meanwhile, DGX Cloud, Nvidia’s AI-training-as-a-service platform, will soon partner with AWS as well, which joins the other major cloud providers already on board. These solutions should also be an important driver of future growth, providing a much-needed recurring revenue stream for the company.

Although one usually thinks of software when it comes to recurring revenues, hardware revenues are also recurring in a sense. Judging by recent earnings calls of hyperscalers, the useful life of their servers and networking equipment is around 5-6 years, which means the equipment has to be replaced at that frequency. So even if Nvidia’s AI-related revenues were to peak sometime in 2025, a refresh cycle would already begin a few years later. With an emerging technology where advancements are this rapid, it could happen sooner rather than later.

Last but not least, a very important question is what will happen to the $1 trillion installed base of data centers, which Jen-Hsun Huang repeatedly calls out on the company’s earnings calls. If a significant part of these data centers switches from CPU-based to GPU-based computing instances to capture the energy and cost efficiencies of accelerated computing, this could be another important growth driver for Nvidia over the upcoming years.

In light of the above, I believe Nvidia is still at the beginning of its journey in accelerated computing and should define this field for years to come.

Risk factors

As the AI rush began, companies with billions of dollars of cash on hand started to invest heavily in creating their own AI infrastructure, including custom AI chips. This should increase competition materially in the space over the upcoming years, which is an important risk factor for Nvidia. However, as I summarized in my last article on the company, Nvidia has a technological lead of several years, which competitors won’t find easy to close.

Another important risk factor for Nvidia is its significant dependency on Taiwan Semiconductor (TSM), which has most of its operations in Taiwan. In the event of a Chinese military offensive against Taiwan, Nvidia would suffer serious supply chain disruptions. Although TSMC is working to mitigate this risk, this could take several years.

Finally, further export restrictions from the U.S. government are another important risk factor, as they could suppress Nvidia’s Chinese business in the future.

Valuation

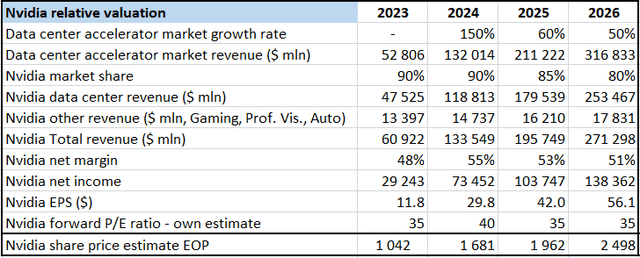

In my previous article on the company, I set up a detailed relative valuation framework with multiple scenarios, but now I will only discuss my base case scenario. Based on Lisa Su’s optimistic comments on data center accelerator market growth combined with external sources, I assume 150% growth for the entire market in 2024, falling back to 60% in 2025 and to 50% in 2026. I believe Nvidia will maintain its market-leading position over the upcoming years, with its market share slowly falling as competitors emerge.
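To make the compounding effect of these growth assumptions tangible, here is a small sketch. It only chains the three growth rates stated above and normalizes the 2023 market size to 1.0, since no absolute market size is given here; the variable names are illustrative.

```python
# Assumed annual growth of the data center accelerator market (from the text)
growth_by_year = {2024: 1.50, 2025: 0.60, 2026: 0.50}

index = 1.0          # 2023 market size normalized to 1.0 (no absolute figure assumed)
market_index = {}

for year, growth in growth_by_year.items():
    index *= 1 + growth          # compound each year's growth on the prior level
    market_index[year] = index

for year, level in market_index.items():
    print(f"{year}: {level:.1f}x the 2023 market")
```

Under these assumptions the market would be roughly six times its 2023 size by 2026, which is why even a gradually declining market share can still translate into strong absolute growth.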

According to management, gross margins will stabilize in the mid-70s over 2024, which could be enough to maintain a net margin of 50%+ over the upcoming years. This leads me to the following EPS estimates for the upcoming years:

Created by author based on own estimates

To attach a price multiple to these EPS estimates, I have used the forward P/E ratio, as it is a widely used valuation metric for mature, profit generating companies. Looking at the forward P/E ratio of Nvidia for the past couple of years shows the following picture:

Seeking Alpha, YCharts

We can see that as the denominator of the ratio (earnings) rolled over to the next financial year, the ratio declined significantly at the beginning of both 2023 and 2024. Currently, the ratio stands at 32.25, which is at the lower end of the range seen in previous years. I believe that the multiple should gradually expand over the course of 2024, as the fundamental momentum is far from running out of steam.

As Nvidia Corporation continues to beat sales expectations, I think its valuation could expand hand in hand. This is why I assumed a forward P/E ratio of 40 for the end of 2024 in my valuation framework, which results in an end-of-period share price of $1,681 with 2025 EPS of $42. This is my year-end price target for Nvidia Corporation, which would mean a doubling from current levels.
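The price target is simply the assumed multiple times the EPS estimate, which the following sketch reproduces. It uses the rounded figures from the text, so the result differs by about a dollar from the $1,681 target, presumably because the underlying EPS estimate is not exactly $42. Treating "doubling" as exactly 2x is my simplification, and the function name is hypothetical.

```python
def price_target(forward_pe, forward_eps):
    """Share price implied by a forward P/E multiple and an EPS estimate."""
    return forward_pe * forward_eps

TARGET_PE = 40       # assumed forward P/E at the end of 2024
EPS_2025 = 42        # rounded 2025 EPS estimate
CURRENT_PE = 32.25   # current forward P/E

target = price_target(TARGET_PE, EPS_2025)
multiple_expansion = TARGET_PE / CURRENT_PE - 1

# ASSUMPTION: "doubling" is taken as exactly 2x to back out the rest
earnings_factor = 2.0 / (1 + multiple_expansion)

print(f"Price target: ${target:,.0f}")
print(f"Multiple expansion: {multiple_expansion:.1%}")
print(f"Required growth in the earnings base: {earnings_factor - 1:.1%}")
```

The split suggests that multiple expansion accounts for only about a quarter of the upside, with the rest depending on the earnings base moving higher as estimates rise and the forward year rolls over.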

I expect most of this performance to come from better-than-expected revenues translating into higher profitability, combined with a positive re-rating of the shares. Although this may seem a bit aggressive at first sight, I believe the market still underappreciates the impact the AI revolution and accelerated computing could have on Nvidia’s bottom line.
